tl;dr: The Burger Hunter ‘dissed’ White Castle, but still wrote solid burger reviews. I studied him and used machine learning to replicate his reviews after he quit writing.

I subscribe to Secrets of the City. It’s a twice-weekly email that tells you about stuff coming up in the Twin Cities. Super good.

Back in 2017, it had a regular feature called “The Burger Hunter”. This guy reviewed hamburgers around the Twin Cities, rating each one from 1 to 10 on Flavor, Presentation, and Originality. Even better, this guy had a very specific writing style:

As you can imagine the meat gets dominated by onion and renders that smell we all know from 50 feet away. I order mine without the O’s because they’ve been pretty much marinating in um. Topped with cheese and tbh it’s the only contrast you’ll get in the flavor on this ride. It’s so BOM bun onion meat that the cheese actually stands out.

[Quoted from the White Castle review, for which I hate him]

Seriously, Mr. Burger Hunter had such a distinctive voice: liberal emojis, acronyms no one understands, sentences to make an English teacher cry:

First off it’s a hot mess. But glorious. It’s like I hope nobody’s looking kinda good. Just dig in.

[Quoted from the Joe Senser’s review]

I originally started paying attention out of hate. If you sum the three scores, he gave my beloved White Castle a 17.1, the lowest ever. Which is also bullshit: the only four he ever gave in any category was for White Castle’s presentation. Those boxes are a national treasure. I’m not being sarcastic at all – just look at this photo from the review of the burger tavern:

That’s ugly as shit. Especially compared to these beauties:

[photo stolen from godairyfree.org]

Enraged, I did what any Data Scientist would do – I scraped his corpus and made a dataset. Definitely explore it for yourself, but here are some highlights:

- Only one perfect score was ever granted, and it went to The Red Cow

- The worst two scores were the first review (Annie’s Parlour, 17.5, which he still called “A damn good burger”) and last review (White Castle, 17.1)

- Flavor scores fall exclusively between 7.1 and 10, which makes a ten-point scale strange

- The third-lowest score ever (Miller’s Corner Bar) is described as “Before us is probably my number 1 most favorite hunt so far”

- To be fair, he starts his top review ever with “Have you ever woke up and said, ‘I had too much to dream last night?’” (Red Cow)
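If you want to poke at the totals yourself, the math is just a sum of three numbers. Here’s a minimal sketch using only the scores quoted in this post. The field names and the `total_score` helper are my own for illustration, not the layout of the actual dataset, and I’m reading “perfect score” as a 10 in all three categories.

```python
# Only scores quoted in this post; the real dataset has ~90 rows.
# Field names are illustrative, not the dataset's actual columns.
reviews = [
    {"restaurant": "Matt's Bar", "flavor": 10.0, "presentation": 5.0, "originality": 10.0},
    # Assumption: "perfect score" means 10 in every category.
    {"restaurant": "Red Cow", "flavor": 10.0, "presentation": 10.0, "originality": 10.0},
]


def total_score(review):
    """Sum the three category scores into one number out of 30."""
    return review["flavor"] + review["presentation"] + review["originality"]


# Rank from best to worst by total score.
ranked = sorted(reviews, key=total_score, reverse=True)

for review in ranked:
    print(f"{review['restaurant']}: {total_score(review):.1f}")
```

Swap in the full set of reviews and the same sort puts White Castle’s 17.1 at the very bottom.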

Needless to say, this guy is somewhere between “I love hamburgers” and “BOW BEFORE YOUR HAMBURGER GOD”.

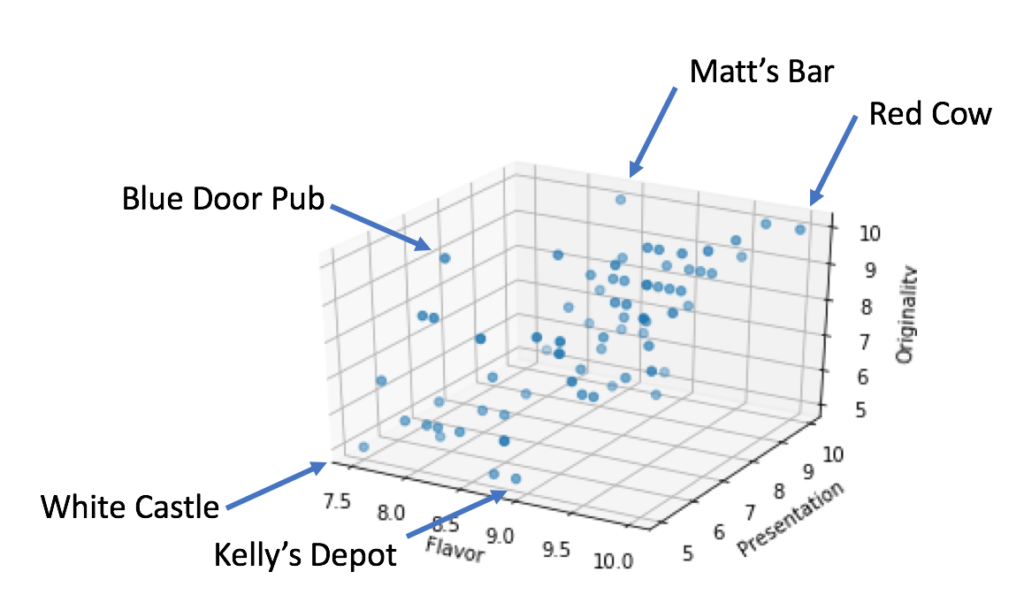

3D plots suck, but here’s every score he gave:

Really, they suck. See how Matt’s Bar looks high on presentation and low on flavor? It’s actually Originality: 10, Presentation: 5, Flavor: 10. The lightness of the dot is supposed to tell you it’s low on the presentation scale. Or not, I don’t really know. 3D plots suck. ¯\_(ツ)_/¯

More important than pivoting on 90+ reviews (such as discovering that Kelly’s Depot Bar has the best flavor, in spite of its originality or presentation), we need technology to fill the empty hole left by the Burger Hunter.

Enter Andrej Karpathy. This guy has written about “Recurrent Neural Networks” (RNNs), a brand of machine learning that’s good with sequences (like the sequence of letters you’re reading now!). Train an RNN on enough text and it can learn how to spell. Seriously: give the model a seed of a few letters in a sentence, and it guesses the next letter.

For example, let an RNN run through Shakespeare. Then, start it out by providing the first little bit of a text:

PANDARUS:

Alas, I thi

It starts by guessing that the next letter is ‘n’. Shift one space over, and it thinks the next letter is ‘k’. Eventually, it writes:

PANDARUS:

Alas, I think he shall be come approached and the day

When little srain would be attain’d into being never fed,

And who is but a chain and subjects of his death,

I should not sleep.

I’m glossing over details, but the takeaway here is that this is not a quote from one of Shakespeare’s plays. This RNN model is writing brand-new-Shakespeare. Let’s do this with the Burger Hunter!
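To make the “guess the next letter, shift over, repeat” loop concrete, here’s a rough sketch. Big caveat: this is not an RNN. I’ve swapped in a dumb lookup table that counts which character followed each 4-character context, just to show the shape of the generation loop. The `train` and `generate` names, the context length, and the toy training text (borrowed from the quote further up) are all my own choices for illustration.

```python
from collections import Counter, defaultdict


def train(text, context_len):
    """Count which character follows each run of `context_len` characters."""
    counts = defaultdict(Counter)
    for i in range(len(text) - context_len):
        context = text[i:i + context_len]
        next_char = text[i + context_len]
        counts[context][next_char] += 1
    return counts


def generate(counts, seed, context_len, n_chars):
    """Extend `seed` one character at a time using the most common guess."""
    out = seed
    for _ in range(n_chars):
        context = out[-context_len:]  # slide the window one space over
        if context not in counts:
            break  # this context never appeared in the training text
        out += counts[context].most_common(1)[0][0]
    return out


# Toy training text (a stand-in for the full corpus).
corpus = (
    "First off it's a hot mess. But glorious. "
    "It's like I hope nobody's looking kinda good. Just dig in."
)
model = train(corpus, context_len=4)
print(generate(model, seed="It's", context_len=4, n_chars=20))
```

A real RNN replaces the lookup table with a learned network that can generalize to contexts it has never seen, but the outer loop looks much the same: predict one character, append it, slide over, repeat.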

I trained a model on every word the Burger Hunter ever published. It’s really entertaining how bad my model is at the beginning. Here’s an excerpt from early in training:

ed burger is a burger is a burger is a burger is a burger is a burger is a burger is a burger is a burger is a burger is a burger is a burger is a burger is a burger is a burger is a burger is a burger is a burger is a burger is a burger is a burger

Notice, the RNN gets the idea: after the sequence ‘burg’ comes the letter ‘e’. But it’s stuck in a loop, because it hasn’t learned that ‘i’ shouldn’t always follow ‘is a burger ’. That’s okay, this was only about twenty minutes of training. Let’s zoom forward an hour:

e is so the burger is a sertise shat conled of cheese and the burger is a big burger cutter frilled and some fresh and they don’t spedite. the bun is soft, fresh and the burger is a secret saled frinl. the burger is topped with a secret sale a burger

It’s gibberish… but not bad! After only an hour of training, it’s starting to write coherent phrases, like “the bun is soft, fresh and”.

More training!

juicy & old timey! but the burger is to food in and the bacon is a big slice and down that are so good that anla to the sime cause they are doubling to the slime chute the meat flavor is fooding and put of some garlic just a smoked galf po y

That looks like something a human might have written a little (a lot) drunk. It falls apart at the end… but notice, there’s no repeating. It’s just shy of coherent sentences that tell a story. And this was trained on only ~90 reviews. While this was the best result I achieved back in late 2017, technology is constantly getting better.

We’ll meet again, Mr. Burger Hunter.